Reflections of the Universe: A multimodal adaptive affective audio-visual experiment

2021


This project was part of a course on real-time interaction and interfaces. Working in a small group, we set out to merge deep-space-inspired interactive art installations with novel interaction modalities drawn from state-of-the-art research in affective computing. We aimed to take users on an interactive audiovisual journey that reflects the emotional presence of outer space environments and hints at future possibilities of space exploration. To achieve this, we built a multimodal, adaptive, affective prototype that uses computer vision to drive its audiovisual output.

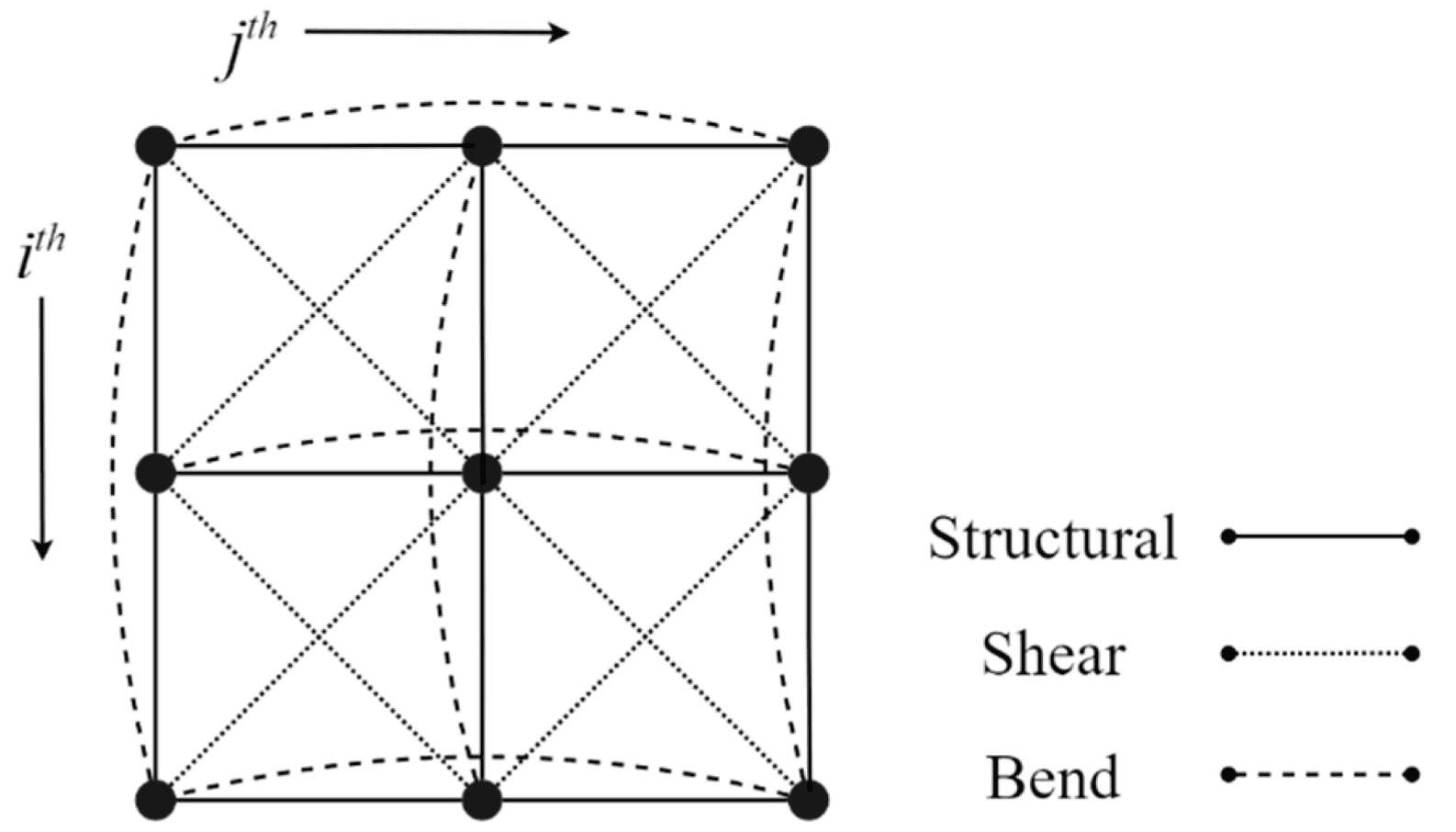

The audio was created with a virtual modular synthesizer, in which multiple modules are patched together to synthesize sound in real time; the sound changed based on a set of measured user parameters. The visual output featured a three-dimensional simulation of generic flocking boids in an outer space environment.
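To make the flocking behavior concrete, here is a minimal Python sketch of the three classic boid rules (cohesion, alignment, separation) in 3D. It is not the project's Unity implementation: the function names, weights, radii, and speed limit are all illustrative assumptions.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def length(a: Vec) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def limit(v: Vec, max_len: float) -> Vec:
    """Shrink v to max_len if it is longer; otherwise return it unchanged."""
    n = length(v)
    return scale(v, max_len / n) if n > max_len else v


def mean(vectors: List[Vec]) -> Vec:
    total: Vec = (0.0, 0.0, 0.0)
    for v in vectors:
        total = add(total, v)
    return scale(total, 1.0 / len(vectors))


@dataclass
class Boid:
    pos: Vec
    vel: Vec


def make_flock(n: int, size: float = 10.0) -> List[Boid]:
    """Random boids inside a cube of half-width `size` (assumed layout)."""
    rnd = random.uniform
    return [
        Boid((rnd(-size, size), rnd(-size, size), rnd(-size, size)),
             (rnd(-1, 1), rnd(-1, 1), rnd(-1, 1)))
        for _ in range(n)
    ]


def step(boids: List[Boid], radius: float = 5.0, min_dist: float = 1.0,
         max_speed: float = 2.0, w_cohesion: float = 0.01,
         w_align: float = 0.05, w_sep: float = 0.1) -> List[Boid]:
    """Advance the flock by one time step; all weights are assumed values."""
    updated = []
    for b in boids:
        near = [o for o in boids
                if o is not b and length(sub(o.pos, b.pos)) < radius]
        accel: Vec = (0.0, 0.0, 0.0)
        if near:
            # Cohesion: steer toward the neighbours' centre of mass.
            centre = mean([o.pos for o in near])
            accel = add(accel, scale(sub(centre, b.pos), w_cohesion))
            # Alignment: steer toward the neighbours' average velocity.
            avg_vel = mean([o.vel for o in near])
            accel = add(accel, scale(sub(avg_vel, b.vel), w_align))
            # Separation: push away from neighbours that are too close.
            for o in near:
                offset = sub(b.pos, o.pos)
                if length(offset) < min_dist:
                    accel = add(accel, scale(offset, w_sep))
        vel = limit(add(b.vel, accel), max_speed)
        updated.append(Boid(add(b.pos, vel), vel))
    return updated
```

Calling `flock = step(flock)` once per frame moves the flock forward. In an adaptive setup like the one described here, a weight such as `w_cohesion` or `max_speed` could be driven by a user parameter, though which parameters the project actually modulated is not stated.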

Real-time interactions of the system included generative adaptive visuals and soundscapes that changed based on the user’s estimated heartbeat and emotional state. We employed sensor-, visual-, and audio-based modalities as proposed by Karray et al. Specifically:

- Sensor: A live webcam feed provided continuous data, which computer vision algorithms analyzed to predict several physiological states of the user. Because data from this single modality was interpreted more than once, it introduced multimodal ambiguity.

- Visual: A computer screen or television displayed a 3D outer space environment combined with a basic flocking boid model, facilitating natural affective interaction between the user and the computer.

- Audio: Generative audio synthesis in a Eurorack simulator served as the auditory modality, so the system’s sound output could change in real time based on the physiological states interpreted from the live video feed.

Together, these modalities shaped the real-time interaction by estimating the user’s heartbeat and emotional state, as illustrated in our data tables and figures.
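As a rough illustration of how an estimated heartbeat could be turned into a control value for visuals or sound, the sketch below smooths a noisy beats-per-minute estimate and rescales it to the range 0 to 1. The smoothing method, the 50 to 120 BPM range, and all names are assumptions made for this example, not the project's actual mapping.

```python
from typing import Optional


def normalize_bpm(bpm: float, lo: float = 50.0, hi: float = 120.0) -> float:
    """Clamp bpm into [lo, hi] and rescale it to 0..1 (assumed range)."""
    clamped = max(lo, min(hi, bpm))
    return (clamped - lo) / (hi - lo)


class Smoother:
    """Exponential moving average to damp frame-to-frame jitter."""

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha                  # higher alpha reacts faster
        self.value: Optional[float] = None  # no estimate until first sample

    def update(self, sample: float) -> float:
        if self.value is None:
            self.value = sample
        else:
            self.value = self.alpha * sample + (1 - self.alpha) * self.value
        return self.value


# Hypothetical usage: feed each new webcam-based estimate through the smoother.
smoother = Smoother(alpha=0.5)
for raw_bpm in (60.0, 100.0):
    control = normalize_bpm(smoother.update(raw_bpm))
```

A normalized value like `control` is convenient because the same number can scale a synthesizer parameter and a visual parameter without either side needing to know about heart rates.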

My role

My favorite part of my role was implementing the flocking boid behavior and the OSC communication between the platforms we used: Unity, VCV Rack for audio, and Python.